
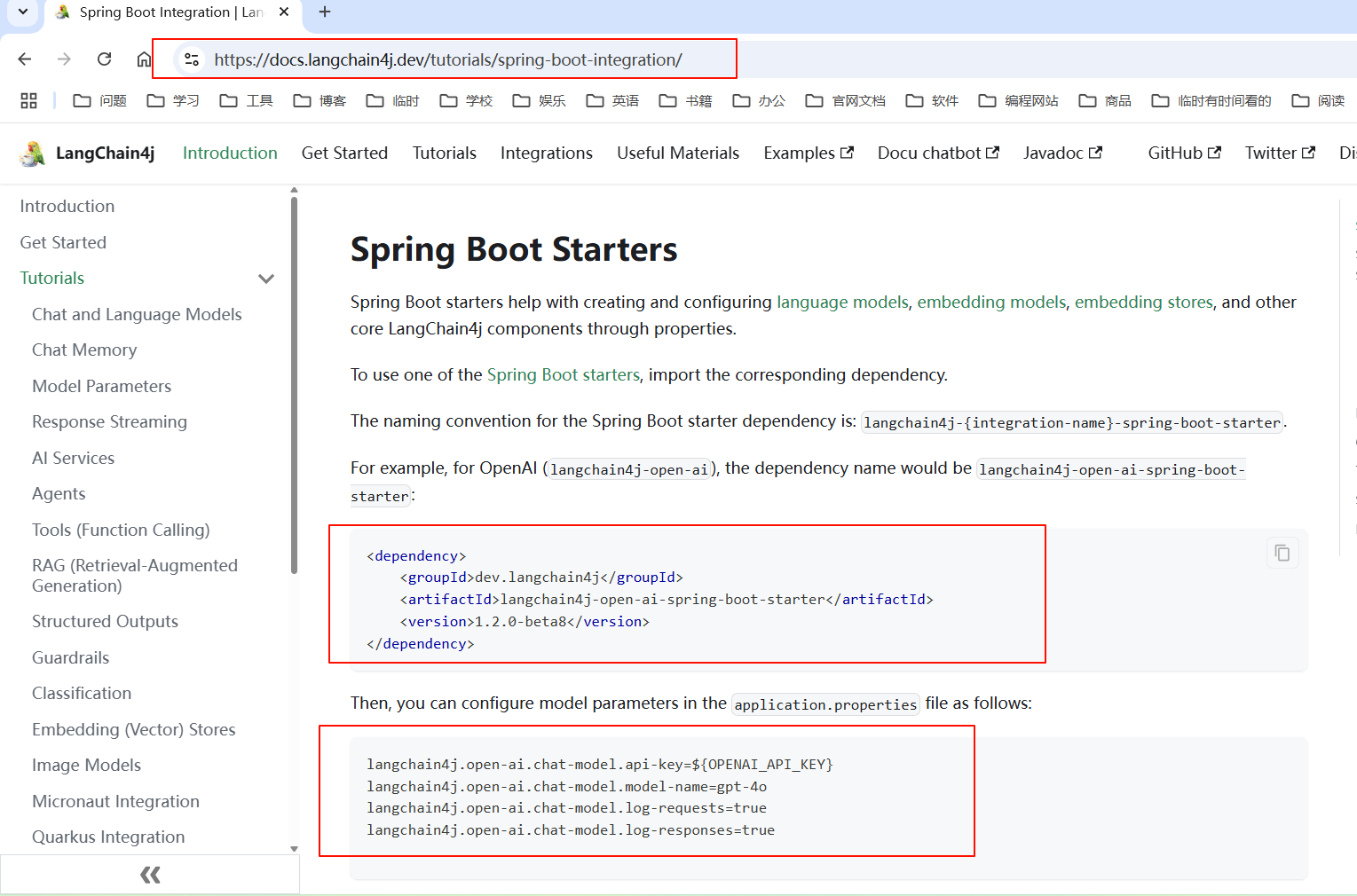

Official LangChain4j documentation on Spring Boot integration: https://docs.langchain4j.dev/tutorials/spring-boot-integration/

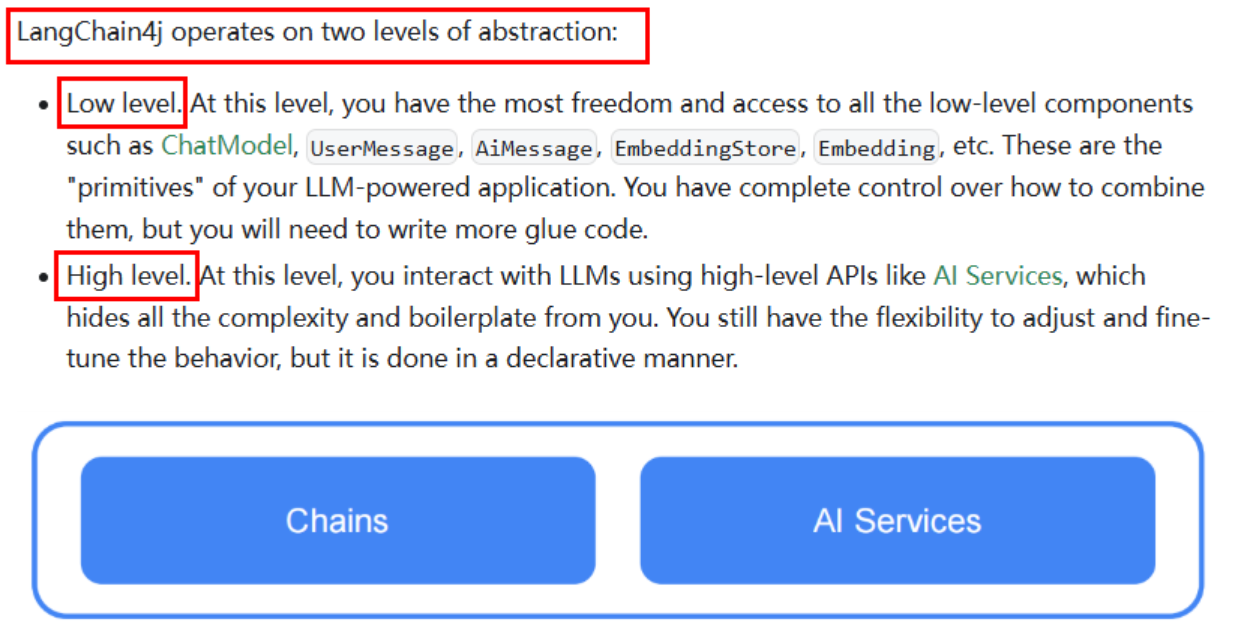

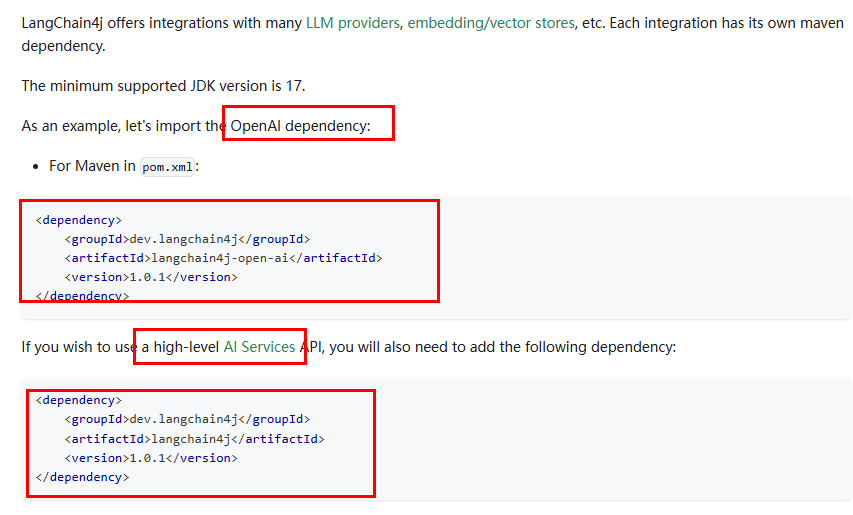

A brief look at LangChain4j's two levels of abstraction

The low-level API and the high-level API

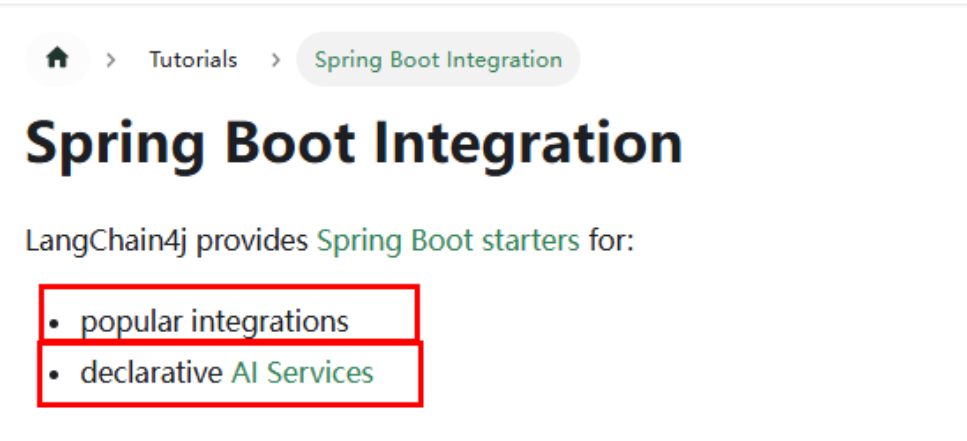

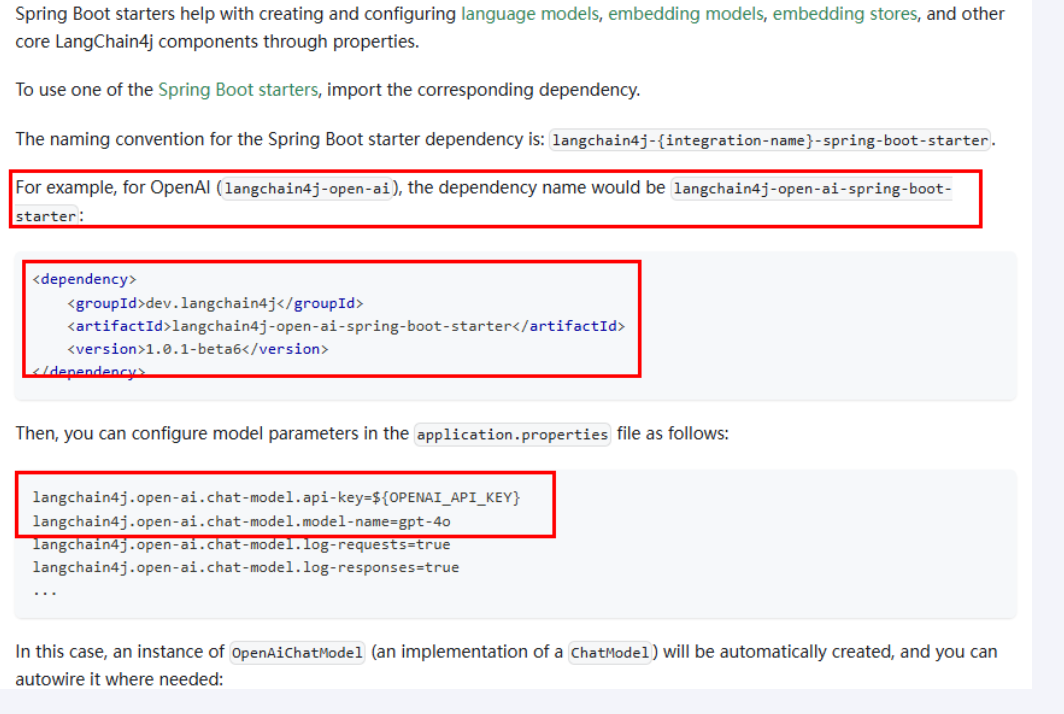

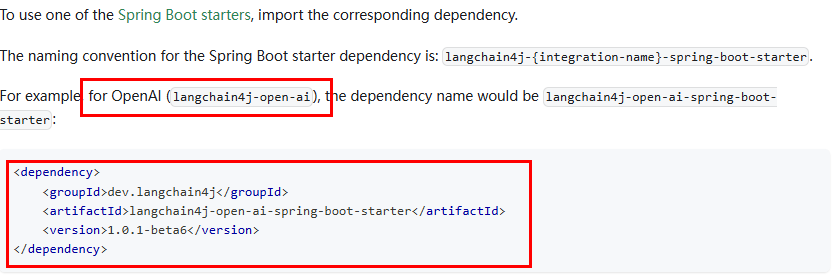

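POM dependency required to integrate the low-level API with Spring Boot:

```xml
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-open-ai-spring-boot-starter</artifactId>
    <version>1.2.0-beta8</version>
</dependency>
```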

```properties
langchain4j.open-ai.chat-model.api-key=${OPENAI_API_KEY}
langchain4j.open-ai.chat-model.model-name=gpt-4o
langchain4j.open-ai.chat-model.log-requests=true
langchain4j.open-ai.chat-model.log-responses=true
...
```

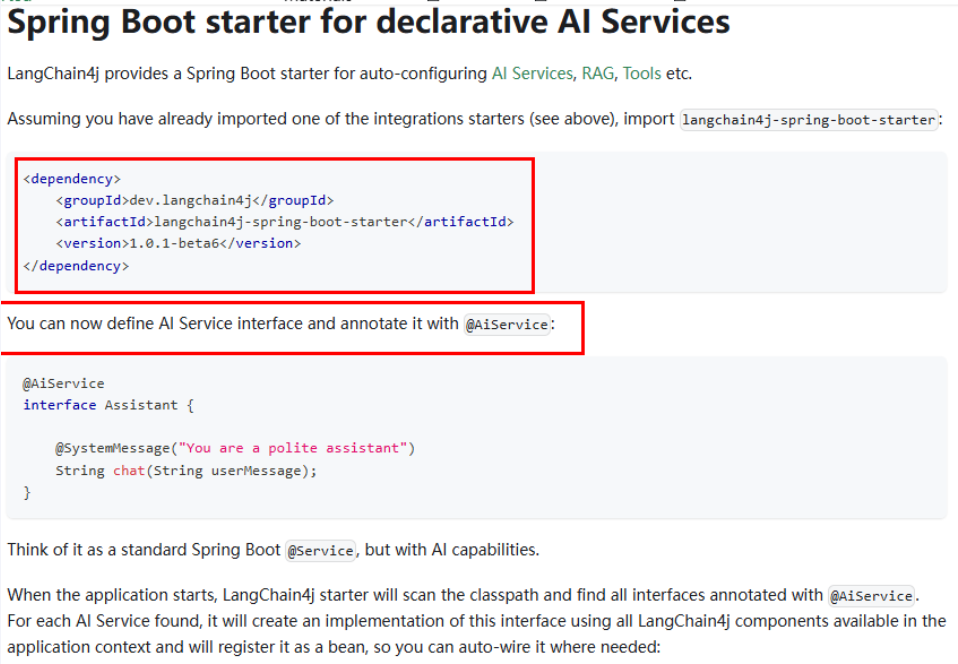

POM dependency required to integrate the high-level API with Spring Boot:

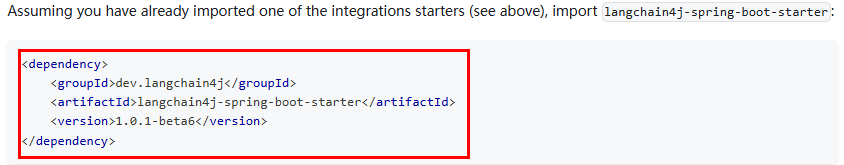

So far, there are two ways to integrate with Spring Boot:

Native LangChain4j integration:

LangChain4j + Spring Boot integration:

Summary:

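```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-open-ai-spring-boot-starter</artifactId>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-spring-boot-starter</artifactId>
</dependency>
```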

```properties
server.port=9008
spring.application.name=langchain4j-08boot-integration

# Set the response character encoding to avoid garbled streaming output
server.servlet.encoding.charset=utf-8
server.servlet.encoding.enabled=true
server.servlet.encoding.force=true

# https://docs.langchain4j.dev/tutorials/spring-boot-integration
#langchain4j.open-ai.chat-model.api-key=${aliQwen_api}
#langchain4j.open-ai.chat-model.model-name=qwen-plus
#langchain4j.open-ai.chat-model.base-url=https://dashscope.aliyuncs.com/compatible-mode/v1

# API keys must not be stored in plain text. Two ways to avoid that:
# 1. Properties/YAML: ${aliQwen_api}, resolved from an environment variable
# 2. Configuration class: System.getenv("aliQwen_api"), read from an environment variable
```
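`System.getenv` returns `null` when the variable is not set, so a missing key only shows up later as a failed model call. The sketch below shows one way to fail fast at startup. `ApiKeys` and its methods are hypothetical names introduced for illustration and are not part of LangChain4j or Spring.

```java
import java.util.Map;

// Hypothetical helper (not part of LangChain4j): resolves an API key from
// environment variables and fails fast when it is missing or blank.
final class ApiKeys {

    private ApiKeys() {
    }

    // Takes the environment as a Map so the lookup can be exercised
    // without touching the real process environment.
    static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isBlank()) {
            throw new IllegalStateException("Environment variable '" + name + "' is not set");
        }
        return value.trim();
    }

    // Convenience overload that reads the real process environment.
    static String require(String name) {
        return require(System.getenv(), name);
    }
}
```

In a configuration class this could be used as `.apiKey(ApiKeys.require("aliQwen_api"))`, so a missing variable stops the application with a clear message.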

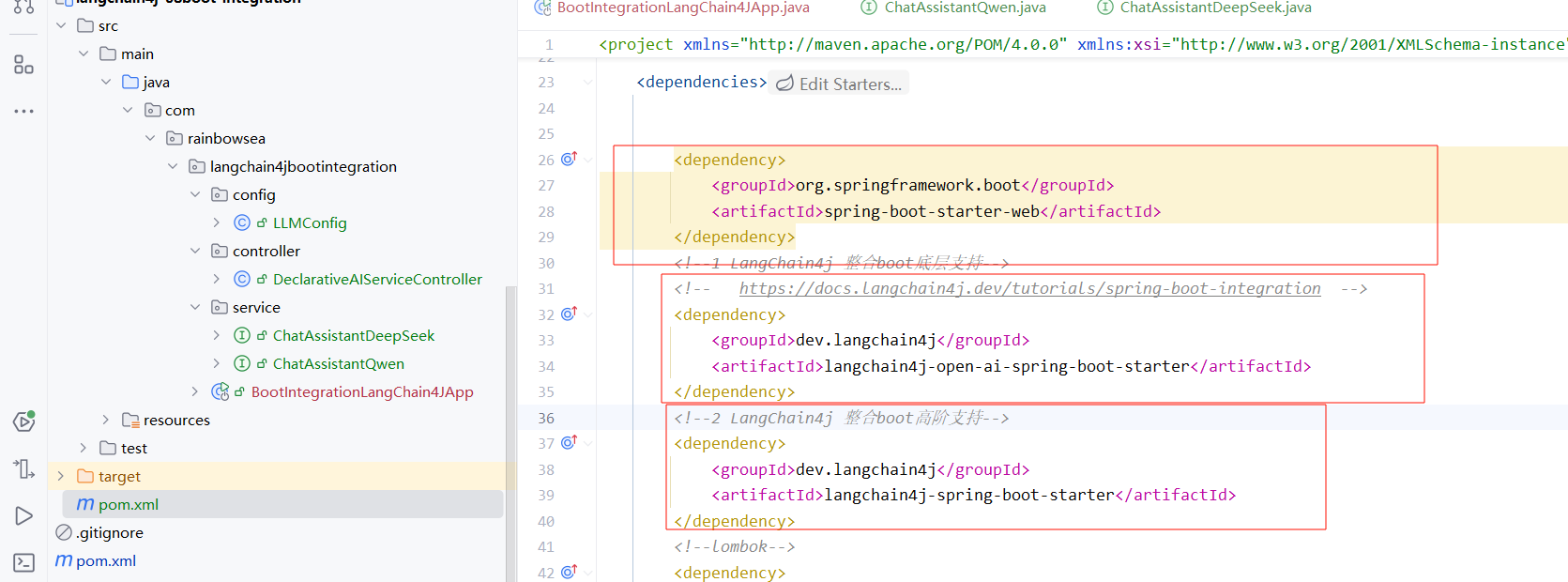

Here we test against two large models: DeepSeek and Tongyi Qianwen (Qwen).

```java
import dev.langchain4j.model.chat.ChatModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Source: https://docs.langchain4j.dev/get-started
 */
@Configuration
public class LLMConfig {

    // Qwen is reached through DashScope's OpenAI-compatible endpoint.
    @Bean(name = "qwen")
    public ChatModel chatModelQwen() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("aliQwen_api"))
                .modelName("qwen-plus")
                .baseUrl("https://dashscope.aliyuncs.com/compatible-mode/v1")
                .build();
    }

    /**
     * Source: https://api-docs.deepseek.com/zh-cn/
     */
    @Bean(name = "deepseek")
    public ChatModel chatModelDeepSeek() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("deepseek_api"))
                .modelName("deepseek-chat")
                //.modelName("deepseek-reasoner")
                .baseUrl("https://api.deepseek.com/v1")
                .build();
    }
}
```

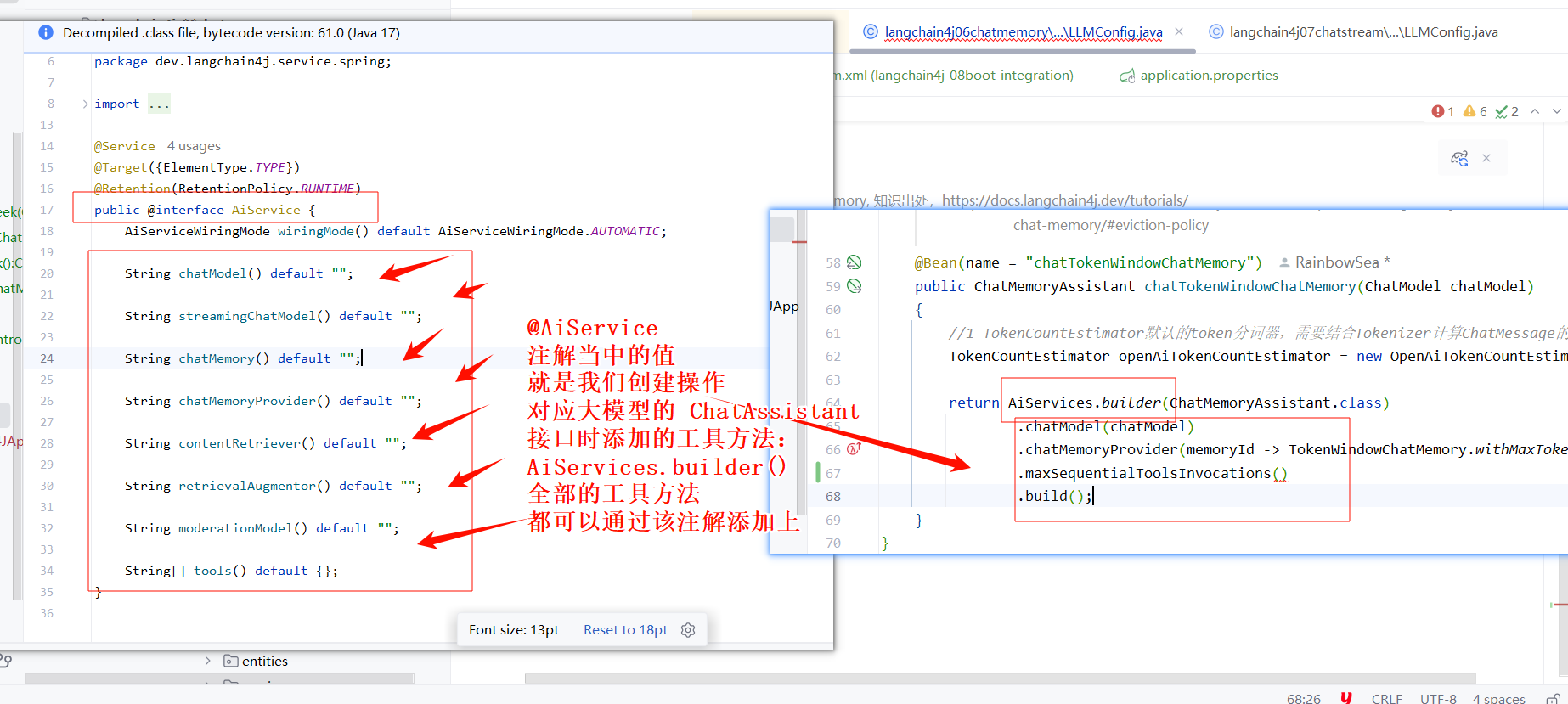

The source code of the @AiService annotation is as follows:

```java
//
// Source code recreated from a .class file by IntelliJ IDEA
// (powered by FernFlower decompiler)
//

package dev.langchain4j.service.spring;

import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import org.springframework.stereotype.Service;

@Service
@Target({ElementType.TYPE})
@Retention(RetentionPolicy.RUNTIME)
public @interface AiService {
    AiServiceWiringMode wiringMode() default AiServiceWiringMode.AUTOMATIC;

    String chatModel() default "";

    String streamingChatModel() default "";

    String chatMemory() default "";

    String chatMemoryProvider() default "";

    String contentRetriever() default "";

    String retrievalAugmentor() default "";

    String moderationModel() default "";

    String[] tools() default {};
}
```
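The string attributes such as `chatModel` hold bean names, which is what lets an interface pick one of several models of the same type. The sketch below illustrates that idea with plain reflection: it reads a name from an annotation and looks up the matching model in a map. It is a simplified illustration only, not LangChain4j's actual wiring code. `DemoAiService`, `DemoQwenAssistant`, and `ExplicitWiringDemo` are hypothetical names, and a `UnaryOperator<String>` stands in for a chat model.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical stand-in for @AiService, reduced to a single attribute.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface DemoAiService {
    String chatModel() default "";
}

// Hypothetical service interface that names the model it wants.
@DemoAiService(chatModel = "qwen")
interface DemoQwenAssistant {
    String chat(String prompt);
}

final class ExplicitWiringDemo {

    private ExplicitWiringDemo() {
    }

    // Reads the chatModel attribute and looks the model up by name,
    // failing loudly when the annotation or the named model is missing.
    static UnaryOperator<String> resolveModel(Class<?> serviceType,
                                              Map<String, UnaryOperator<String>> modelsByName) {
        DemoAiService annotation = serviceType.getAnnotation(DemoAiService.class);
        if (annotation == null) {
            throw new IllegalArgumentException(
                    serviceType.getName() + " is not annotated with @DemoAiService");
        }
        UnaryOperator<String> model = modelsByName.get(annotation.chatModel());
        if (model == null) {
            throw new IllegalStateException(
                    "No model registered under '" + annotation.chatModel() + "'");
        }
        return model;
    }
}
```

With two entries registered under "qwen" and "deepseek", `resolveModel(DemoQwenAssistant.class, models)` returns the "qwen" entry, mirroring how a named bean is selected when several beans share a type.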

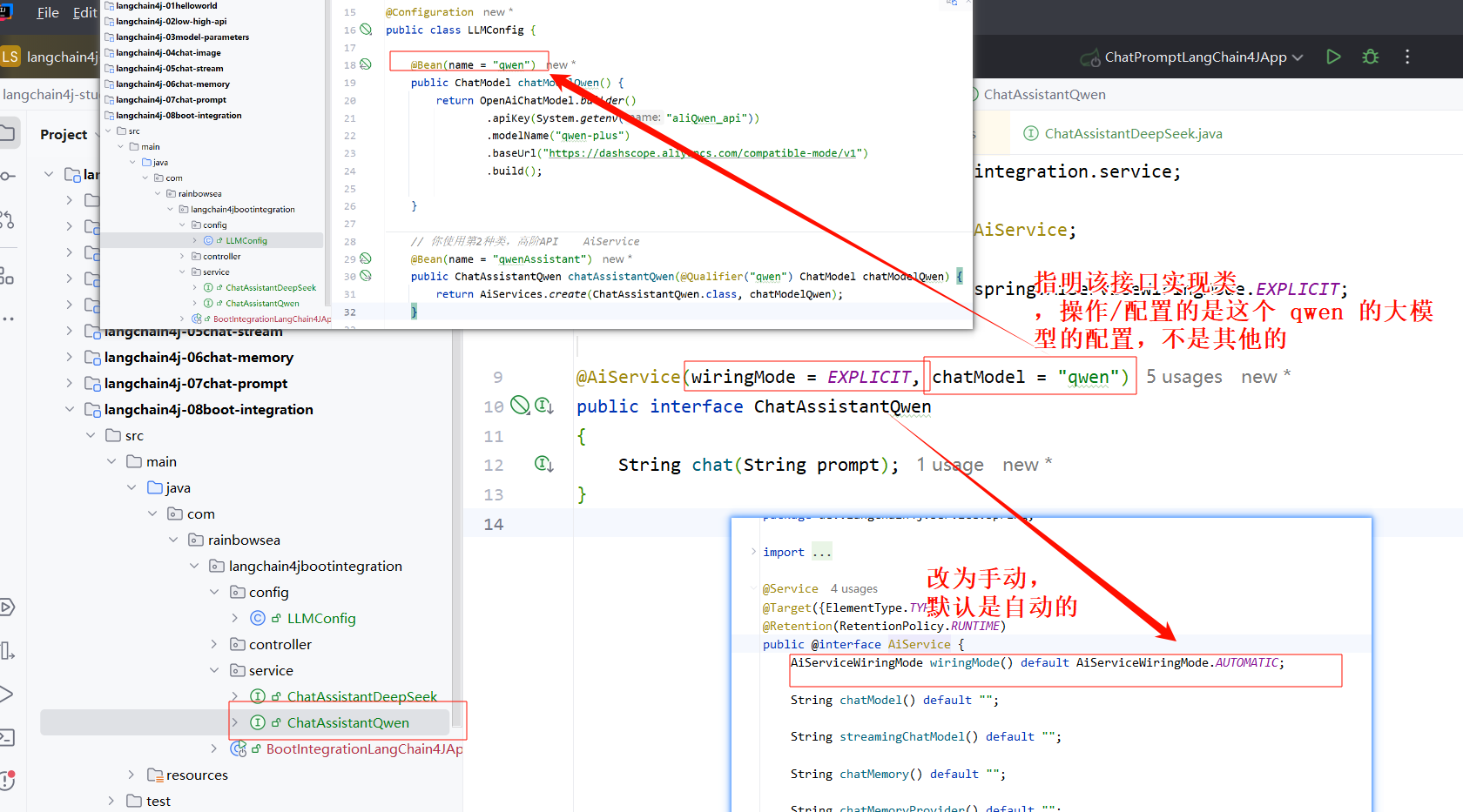

```java
package com.rainbowsea.langchain4jbootintegration.service;

import dev.langchain4j.service.spring.AiService;

import static dev.langchain4j.service.spring.AiServiceWiringMode.EXPLICIT;

@AiService(wiringMode = EXPLICIT, chatModel = "qwen")
public interface ChatAssistantQwen {

    String chat(String prompt);
}
```

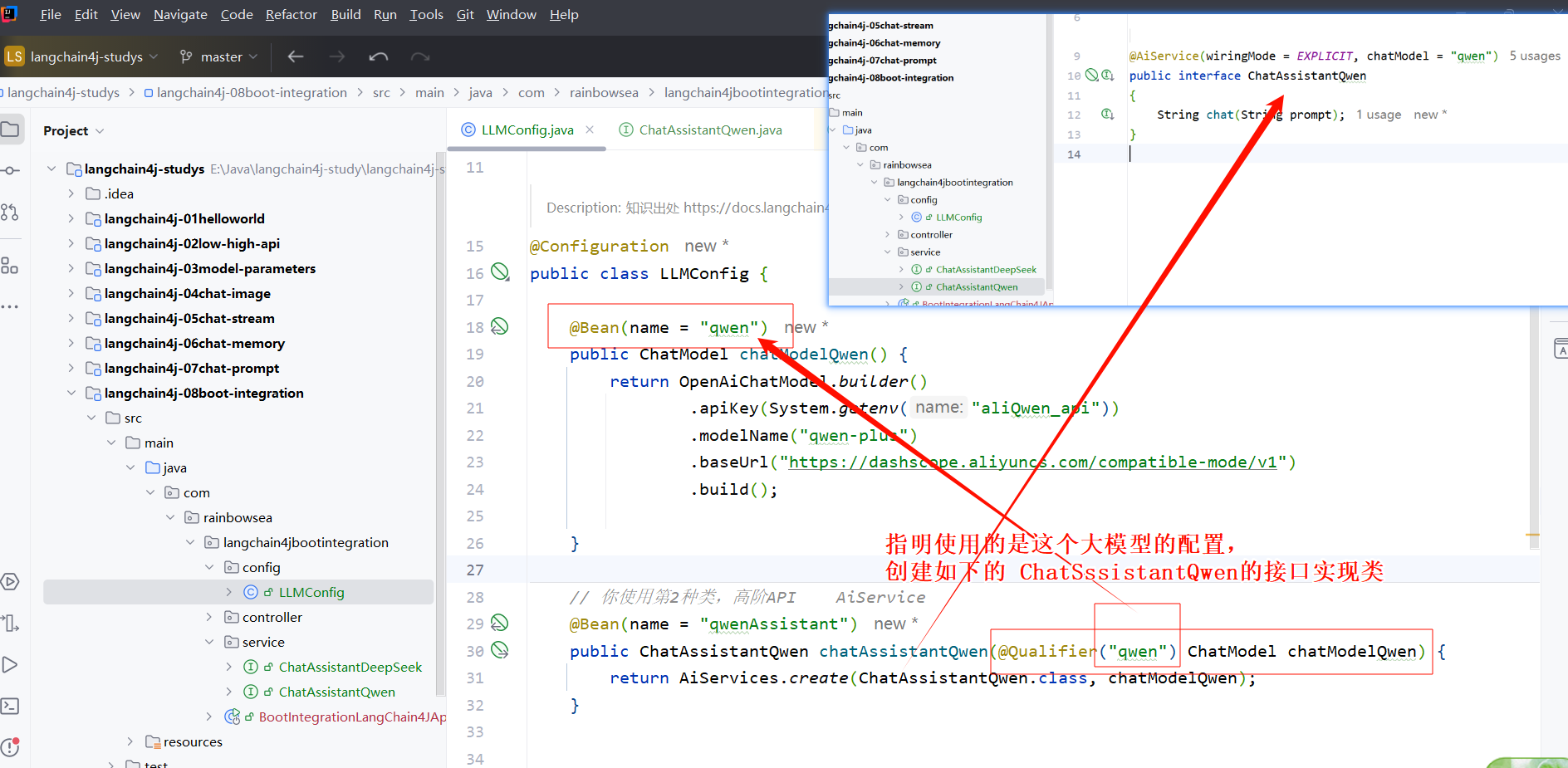

```java
import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantQwen;
import dev.langchain4j.model.chat.ChatModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Source: https://docs.langchain4j.dev/get-started
 */
@Configuration
public class LLMConfig {

    @Bean(name = "qwen")
    public ChatModel chatModelQwen() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("aliQwen_api"))
                .modelName("qwen-plus")
                .baseUrl("https://dashscope.aliyuncs.com/compatible-mode/v1")
                .build();
    }

    // The class continues after the screenshot.
```

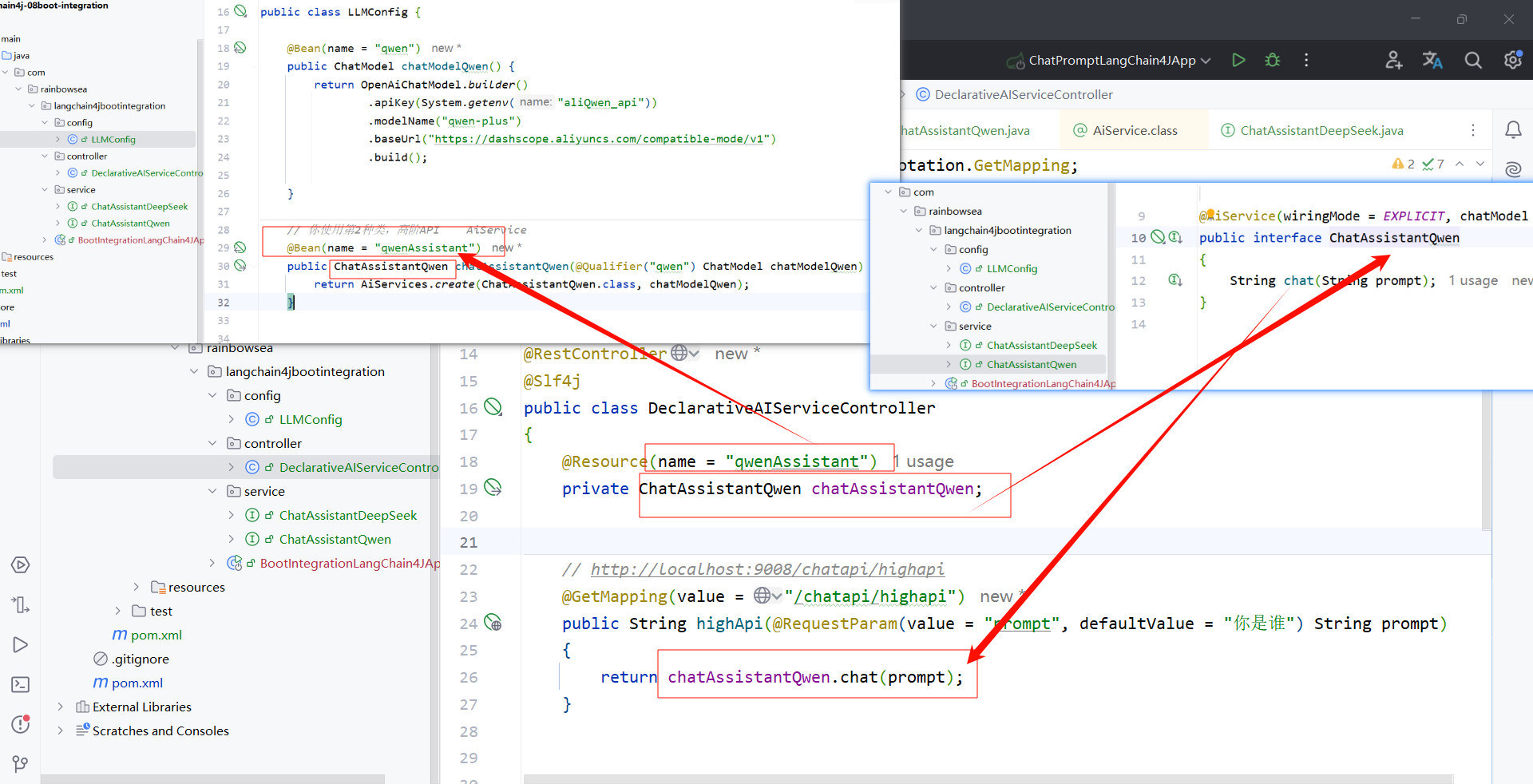

```java
    // Option 2: the high-level API, AiServices
    @Bean(name = "qwenAssistant")
    public ChatAssistantQwen chatAssistantQwen(@Qualifier("qwen") ChatModel chatModelQwen) {
        return AiServices.create(ChatAssistantQwen.class, chatModelQwen);
    }
}
```
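`AiServices.create` is given only an interface and a model, yet it returns an object implementing that interface. One common way to build such an object in Java is a JDK dynamic proxy. The sketch below illustrates the idea under simplifying assumptions: every interface method takes a single `String`, and a `UnaryOperator<String>` stands in for the chat model. `DemoAssistant` and `ProxySketch` are hypothetical names, and this is not LangChain4j's actual implementation.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.function.UnaryOperator;

// Hypothetical service interface with the same shape as ChatAssistantQwen.
interface DemoAssistant {
    String chat(String prompt);
}

final class ProxySketch {

    private ProxySketch() {
    }

    // Builds an implementation of serviceType at runtime. Calls to methods
    // declared on the interface are forwarded to the model with their first
    // argument (assumed to be a String); the three Object methods routed
    // through the handler are answered locally.
    static <T> T create(Class<T> serviceType, UnaryOperator<String> model) {
        InvocationHandler handler = (proxy, method, args) -> {
            if (method.getDeclaringClass() == Object.class) {
                switch (method.getName()) {
                    case "toString":
                        return serviceType.getSimpleName() + "Proxy";
                    case "hashCode":
                        return System.identityHashCode(proxy);
                    default: // equals
                        return proxy == args[0];
                }
            }
            return model.apply((String) args[0]);
        };
        Object proxy = Proxy.newProxyInstance(
                serviceType.getClassLoader(), new Class<?>[] {serviceType}, handler);
        return serviceType.cast(proxy);
    }
}
```

Calling `ProxySketch.create(DemoAssistant.class, p -> "echo: " + p).chat("hello")` forwards the prompt to the lambda, much as the real assistant forwards it to the configured model.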

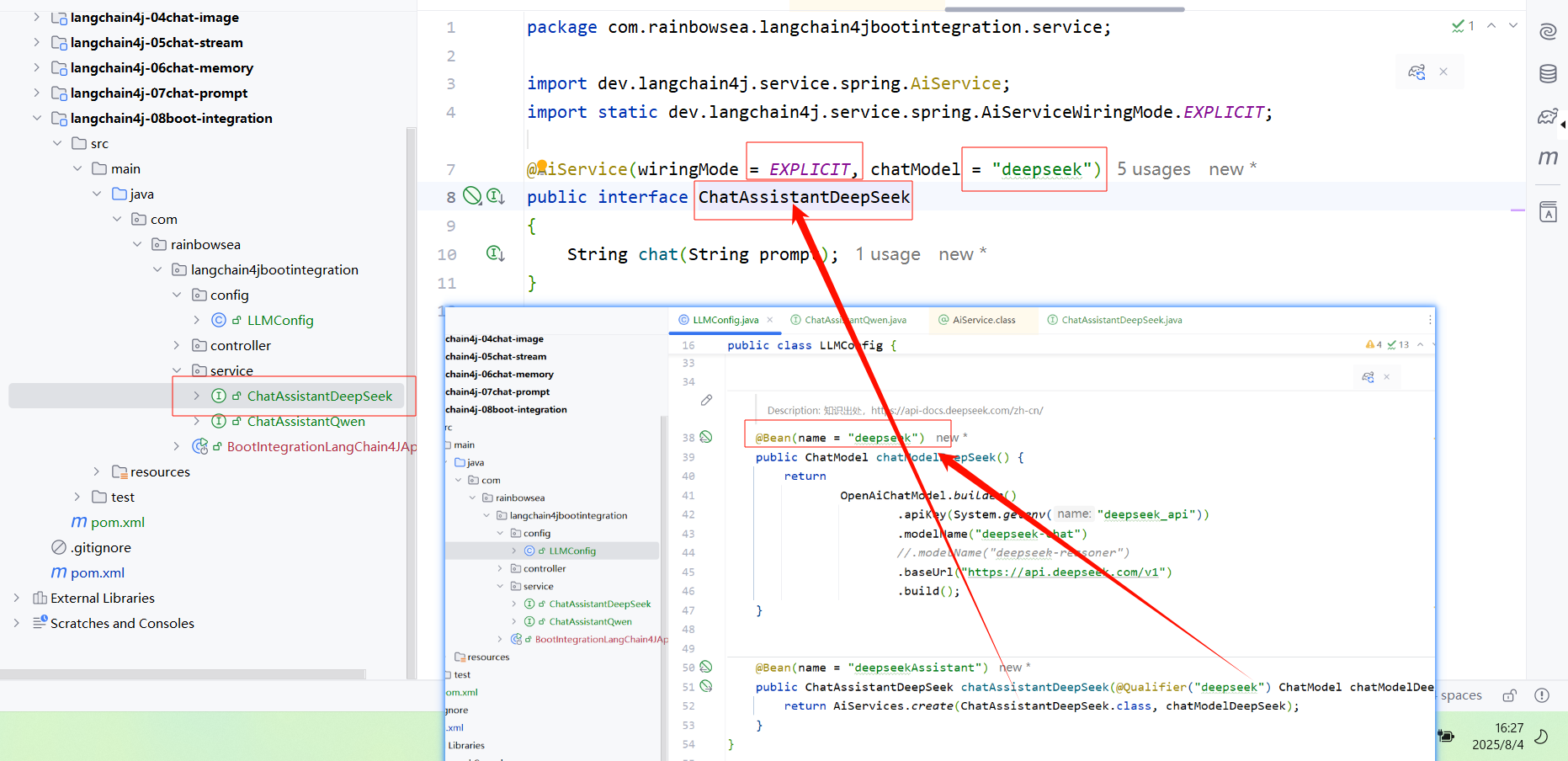

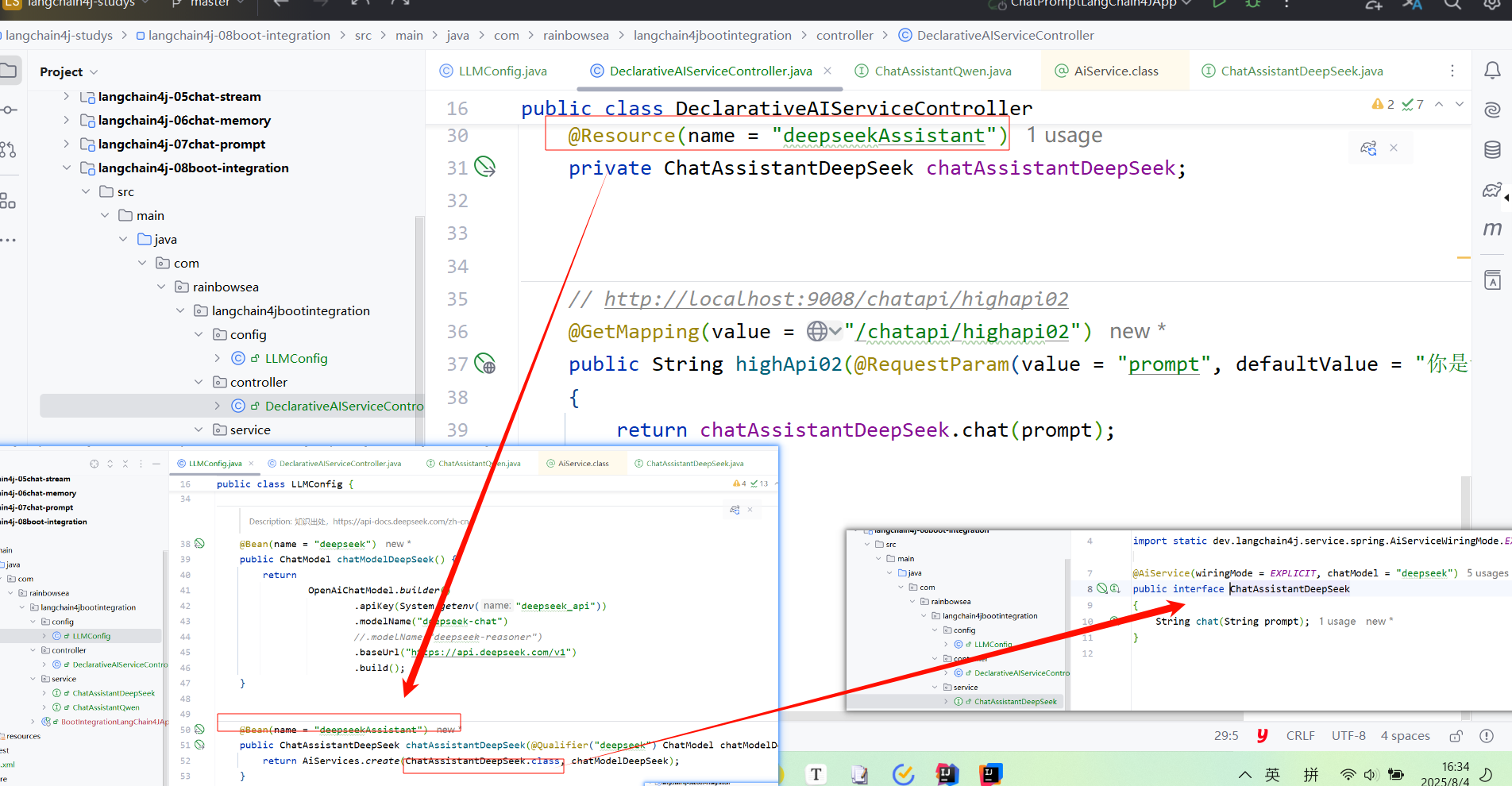

In the same way, we add the service interface for DeepSeek, along with the configuration that binds it to the corresponding model.

```java
package com.rainbowsea.langchain4jbootintegration.service;

import dev.langchain4j.service.spring.AiService;

import static dev.langchain4j.service.spring.AiServiceWiringMode.EXPLICIT;

@AiService(wiringMode = EXPLICIT, chatModel = "deepseek")
public interface ChatAssistantDeepSeek {

    String chat(String prompt);
}
```

```java
package com.rainbowsea.langchain4jbootintegration.config;

import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantDeepSeek;
import dev.langchain4j.model.chat.ChatModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Source: https://docs.langchain4j.dev/get-started
 */
@Configuration
public class LLMConfig {

    /**
     * Source: https://api-docs.deepseek.com/zh-cn/
     */
    @Bean(name = "deepseek")
    public ChatModel chatModelDeepSeek() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("deepseek_api"))
                .modelName("deepseek-chat")
                //.modelName("deepseek-reasoner")
                .baseUrl("https://api.deepseek.com/v1")
                .build();
    }

    // High-level API: bind the DeepSeek model to its service interface.
    @Bean(name = "deepseekAssistant")
    public ChatAssistantDeepSeek chatAssistantDeepSeek(@Qualifier("deepseek") ChatModel chatModelDeepSeek) {
        return AiServices.create(ChatAssistantDeepSeek.class, chatModelDeepSeek);
    }
}
```

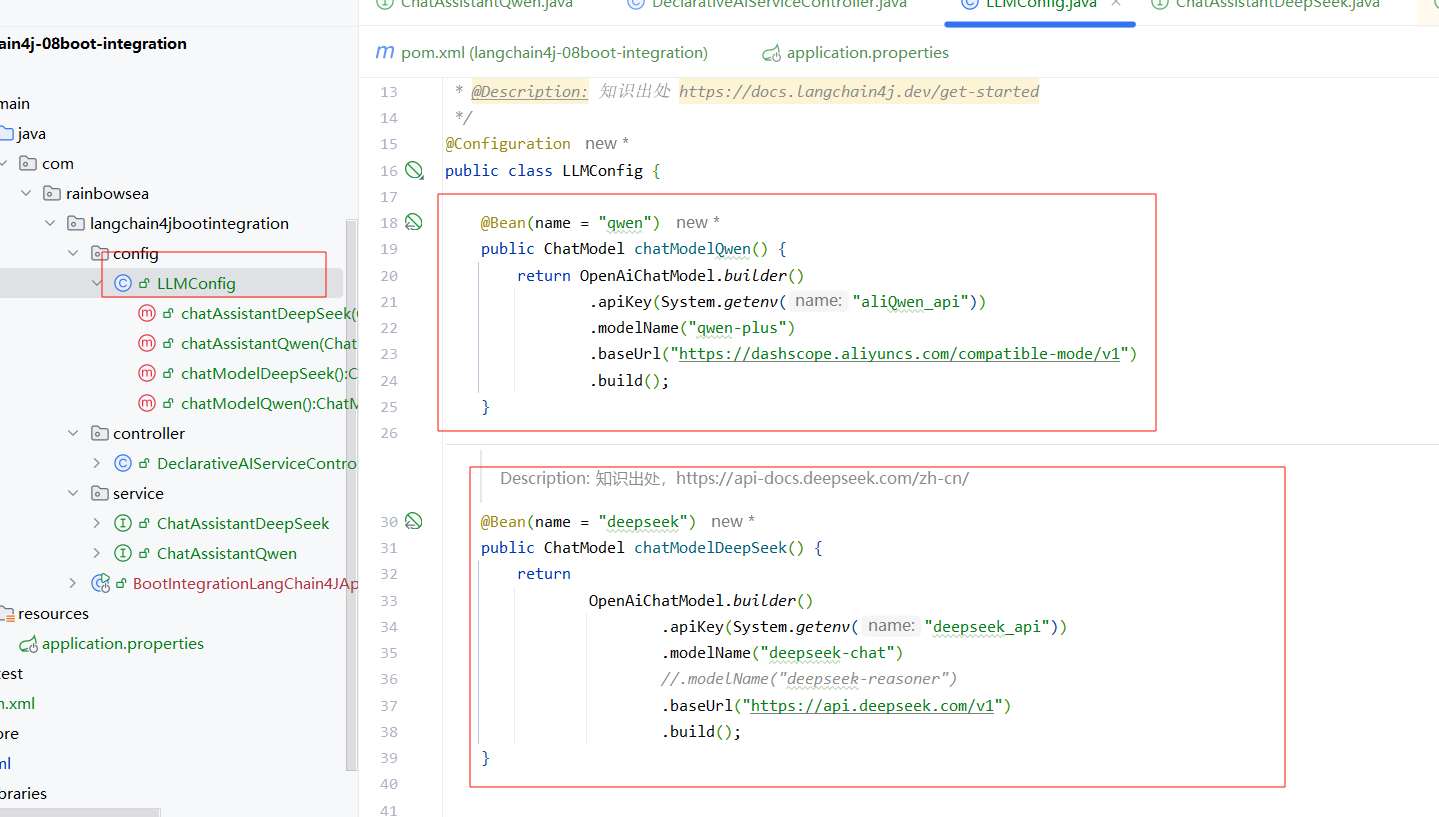

DeepSeek + Tongyi Qianwen (Qwen)

```java
package com.rainbowsea.langchain4jbootintegration.config;

import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantQwen;
import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantDeepSeek;
import dev.langchain4j.model.chat.ChatModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.service.AiServices;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

/**
 * Source: https://docs.langchain4j.dev/get-started
 */
@Configuration
public class LLMConfig {

    @Bean(name = "qwen")
    public ChatModel chatModelQwen() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("aliQwen_api"))
                .modelName("qwen-plus")
                .baseUrl("https://dashscope.aliyuncs.com/compatible-mode/v1")
                .build();
    }

    // Option 2: the high-level API, AiServices
    @Bean(name = "qwenAssistant")
    public ChatAssistantQwen chatAssistantQwen(@Qualifier("qwen") ChatModel chatModelQwen) {
        return AiServices.create(ChatAssistantQwen.class, chatModelQwen);
    }

    /**
     * Source: https://api-docs.deepseek.com/zh-cn/
     */
    @Bean(name = "deepseek")
    public ChatModel chatModelDeepSeek() {
        return OpenAiChatModel.builder()
                .apiKey(System.getenv("deepseek_api"))
                .modelName("deepseek-chat")
                //.modelName("deepseek-reasoner")
                .baseUrl("https://api.deepseek.com/v1")
                .build();
    }

    @Bean(name = "deepseekAssistant")
    public ChatAssistantDeepSeek chatAssistantDeepSeek(@Qualifier("deepseek") ChatModel chatModelDeepSeek) {
        return AiServices.create(ChatAssistantDeepSeek.class, chatModelDeepSeek);
    }
}
```

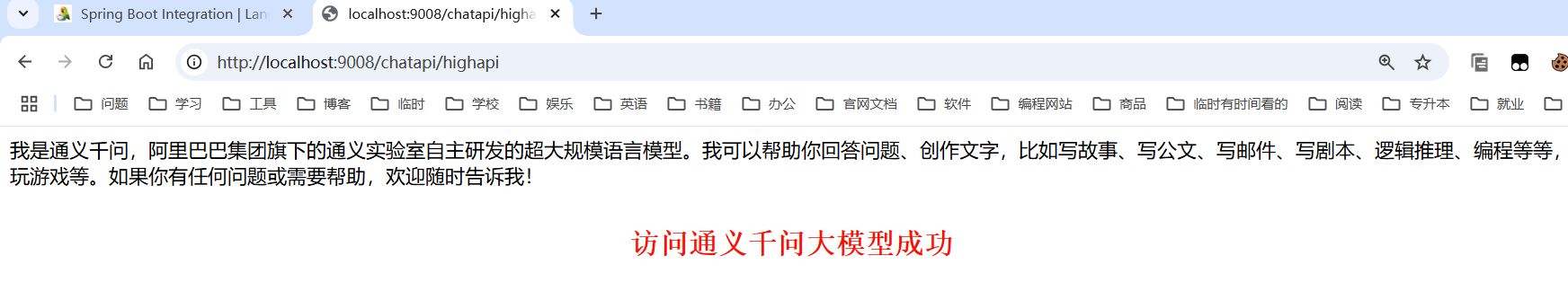

Calling Tongyi Qianwen (Qwen):

```java
import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantQwen;
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * Source: https://docs.langchain4j.dev/tutorials/spring-boot-integration
 */
@RestController
@Slf4j
public class DeclarativeAIServiceController {

    // Injected by bean name, which selects the assistant defined in LLMConfig.
    @Resource(name = "qwenAssistant")
    private ChatAssistantQwen chatAssistantQwen;

    // http://localhost:9008/chatapi/highapi
    @GetMapping(value = "/chatapi/highapi")
    public String highApi(@RequestParam(value = "prompt", defaultValue = "你是谁") String prompt) {
        return chatAssistantQwen.chat(prompt);
    }
}
```

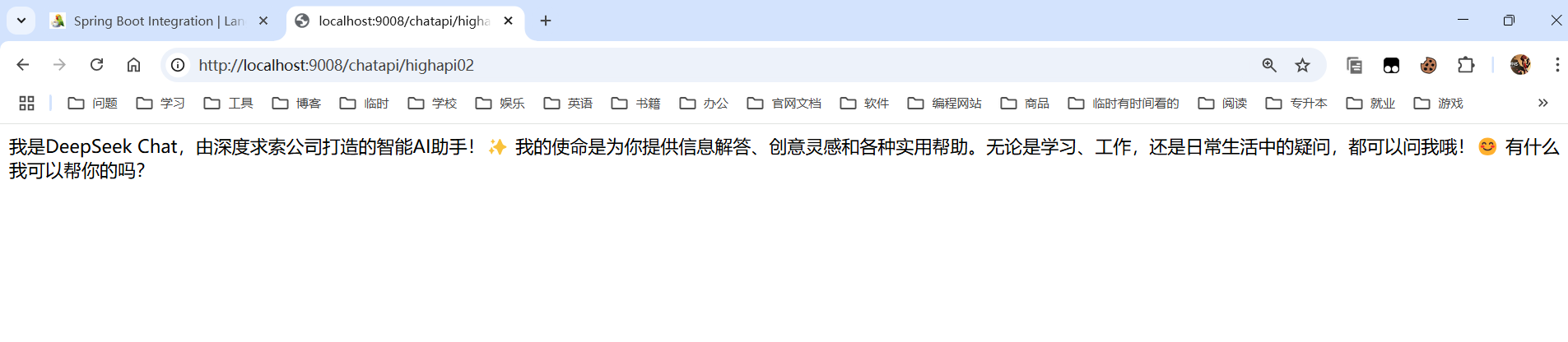

Calling DeepSeek:

```java
package com.rainbowsea.langchain4jbootintegration.controller;

import com.rainbowsea.langchain4jbootintegration.service.ChatAssistantDeepSeek;
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

/**
 * Source: https://docs.langchain4j.dev/tutorials/spring-boot-integration
 */
@RestController
@Slf4j
public class DeclarativeAIServiceController {

    // Injected by bean name, which selects the assistant defined in LLMConfig.
    @Resource(name = "deepseekAssistant")
    private ChatAssistantDeepSeek chatAssistantDeepSeek;

    // http://localhost:9008/chatapi/highapi02
    @GetMapping(value = "/chatapi/highapi02")
    public String highApi02(@RequestParam(value = "prompt", defaultValue = "你是谁") String prompt) {
        return chatAssistantDeepSeek.chat(prompt);
    }
}
```
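Both endpoints take the prompt as a query parameter, so a non-ASCII prompt such as the default `你是谁` has to be percent-encoded when the URL is built in code rather than typed into a browser. The sketch below builds such a URL with the JDK only. `ChatApiUris` is a hypothetical helper name, and the host and port are taken from the configuration shown earlier.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical client-side helper for calling the chat endpoints.
final class ChatApiUris {

    private ChatApiUris() {
    }

    // Builds e.g. http://localhost:9008/chatapi/highapi?prompt=... with the
    // prompt form-encoded as UTF-8 so non-ASCII text survives the request.
    static URI chatUri(String baseUrl, String path, String prompt) {
        String encoded = URLEncoder.encode(prompt, StandardCharsets.UTF_8);
        return URI.create(baseUrl + path + "?prompt=" + encoded);
    }
}
```

The resulting `URI` can be passed to `java.net.http.HttpRequest.newBuilder(uri)` and sent with `java.net.http.HttpClient` once the application is running on port 9008.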

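"In this final section, I want to express my gratitude to every reader. Your attention and replies are what drive me to write, and I have drawn endless inspiration and courage from you. I will keep your encouragement in my heart as I continue to work in other areas. Thank you, and we will surely meet again at some point."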
